Avoid unexpected charges with Firebase and Node 10

Learn what Firebase saves in the bucket called artifacts and how to clean it up to avoid excessive charges when using Node 10 with Cloud Functions.

Sometime in summer 2020, Google announced the end of life for Node 8 support on Cloud Functions. At first, this wasn't a big deal: Node 10 functions were well supported, and the upgrade process was painless (well, most of the time).

Then, a couple of months later, some longer-running projects suddenly started incurring unexplained Firebase Storage charges for exceeding the free tier (5GB), even though no more files than usual were being stored in their buckets. What gives?

When you update the functions you deploy on Cloud Functions to Node 10, it isn't just a simple version bump. Starting with Node 10, Google completely changed how Cloud Functions are built and deployed. Google now uses Cloud Build to deploy your functions, which in turn uses Google's own Container Registry to store images of the functions in a new Cloud Storage bucket called [region].artifacts.[projectId].appspot.com.

Why is Google storing a large amount of data in a bucket called artifacts?

According to the Firebase FAQ, saving deployment images gives you the following:

Using Cloud Build, Container Registry, and Cloud Storage provides the ability to:

• More easily see and debug issues through detailed function build logs in the GCP console.

• Use build time that exceeds the current build quota of 120 build-mins/day.

• View a built container image for your function in Container Registry.

Honestly, I'm not sure this is worth it. Let's see how this change can affect your bill a couple of months down the line. Each time you deploy a function, a snapshot of it is saved to the artifacts bucket, and these snapshots add up over time. This is especially noticeable in development environments, where we tend to deploy a lot to test things, and Google introduced the change without much notice. Here's an example:

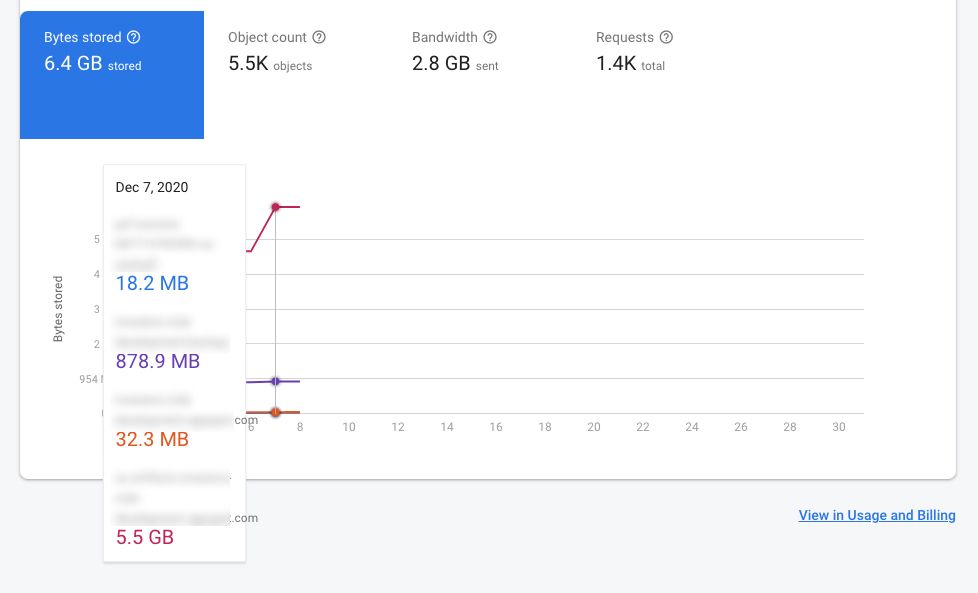

In just a month, we used up the entire free storage tier in the development environment of one of our long-running projects. Nothing else in that environment came even close to the free limit, since there's hardly any activity and only test data there.

The visible spike comes from a single day of heavy deployments of many functions.

In that single day, we used 1.2GB of the 5GB free storage tier just by deploying functions.

This isn't something you'll easily notice unless you actively look for it. As it stands, out of the 6.4GB used on this project, 86% is Cloud Functions snapshots, 14% is backups, and less than 1% is the storage the app actually uses.

This, of course, incurs a charge. It currently sits at $0.03, but left unchecked it can easily grow, especially since Google keeps these images in your Cloud Storage forever unless you delete them.
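To make the figures above concrete, here is a back-of-the-envelope sketch. It uses the 6.4GB total, the 86% snapshot share and the 5GB free tier quoted above; the per-GB monthly price is a hypothetical parameter you would take from the current Cloud Storage pricing page, not a number from this post.

```python
def storage_breakdown(total_gb: float, snapshot_share: float, free_tier_gb: float = 5.0) -> dict:
    """Split total usage into function snapshots vs. everything else,
    and compute how much of the total exceeds the free tier."""
    snapshots_gb = total_gb * snapshot_share
    other_gb = total_gb - snapshots_gb
    billable_gb = max(0.0, total_gb - free_tier_gb)
    return {
        "snapshots_gb": round(snapshots_gb, 2),
        "other_gb": round(other_gb, 2),
        "billable_gb": round(billable_gb, 2),
        # Would the project exceed the free tier if the snapshots were gone?
        "over_free_tier_without_snapshots": other_gb > free_tier_gb,
    }


def monthly_overage_cost(billable_gb: float, price_per_gb_month: float) -> float:
    """Estimated monthly cost: billable GB times an assumed per-GB price."""
    return billable_gb * price_per_gb_month


breakdown = storage_breakdown(total_gb=6.4, snapshot_share=0.86)
print(breakdown)
```

With these inputs the snapshots alone come to roughly 5.5GB, which is already over the 5GB free tier, while everything else (about 0.9GB) would fit comfortably inside it.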

How to deal with this?

Fortunately, this is easy to manage once you know what to look for.

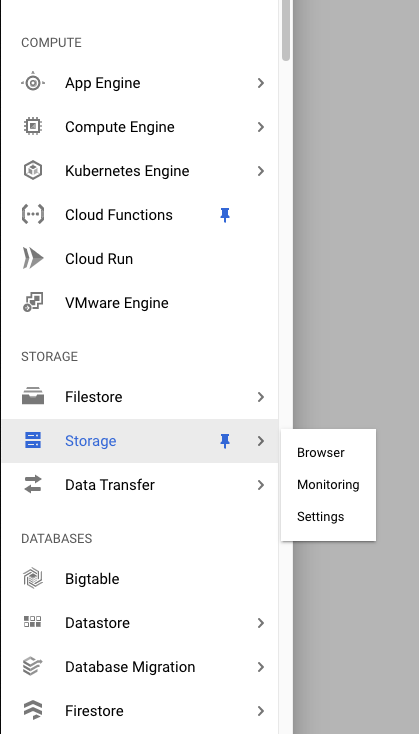

First up, open the Storage section in Google Cloud Platform:

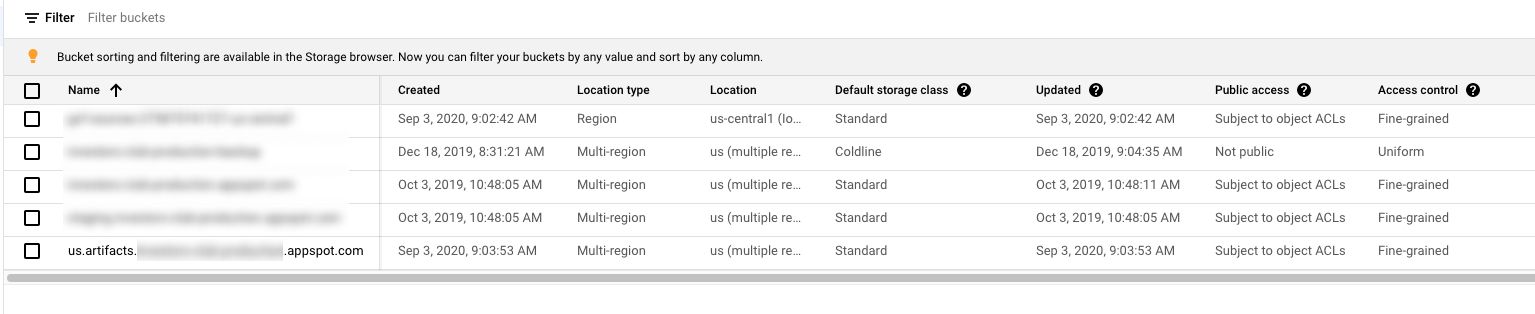

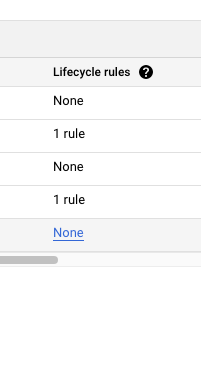

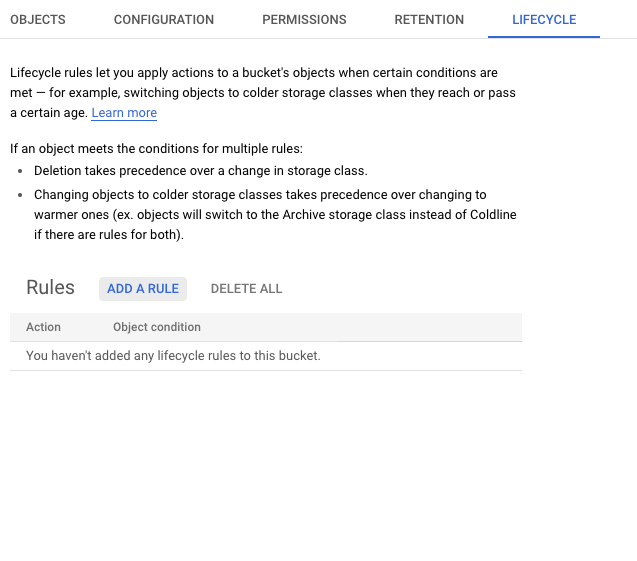

Find the [region].artifacts.[projectId].appspot.com bucket and click the link in its Lifecycle rules column:

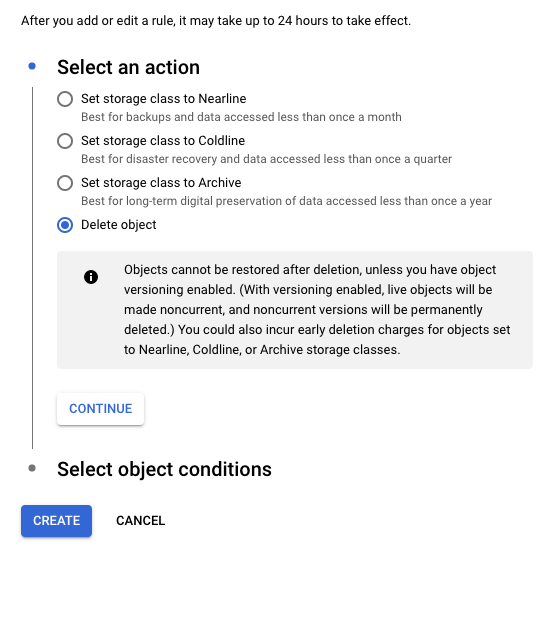

Now click the Add a rule button.

Select Delete object as the lifecycle action and click Continue to set up the deletion conditions.

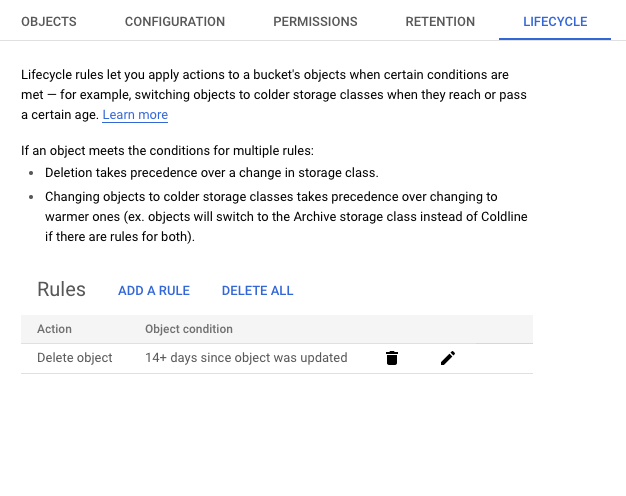

Select Age and enter the number of days you want to keep content in this bucket. You can start with 14 days and lower the number if you need to save more space. And that's it: once Google catches up with your changes, images older than 14 days should be removed.
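If you prefer a file you can review and reapply over clicking through the console, the same rule can be written as a Cloud Storage lifecycle configuration. This is a minimal sketch, assuming you apply the file with `gsutil lifecycle set lifecycle.json gs://[region].artifacts.[projectId].appspot.com`; the helper names and the file name are hypothetical, and the 14-day default simply mirrors the suggestion above.

```python
import json


def delete_after_days_config(age_days: int = 14) -> dict:
    """Build a lifecycle config with one rule: delete objects older than age_days.

    The {"rule": [{"action": ..., "condition": ...}]} shape is the JSON
    format accepted by `gsutil lifecycle set`.
    """
    # Precaution: reject zero or negative ages so a typo can't wipe every image.
    if age_days < 1:
        raise ValueError("age_days must be a positive number of days")
    return {
        "rule": [
            {
                "action": {"type": "Delete"},
                "condition": {"age": age_days},
            }
        ]
    }


def write_config(path: str, age_days: int = 14) -> None:
    """Write the lifecycle config to a JSON file for use with gsutil."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(delete_after_days_config(age_days), f, indent=2)
```

Calling `write_config("lifecycle.json")` produces a file you can pass to the gsutil command above; keeping it in your repository also documents which retention period you chose.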

Please double-check what you're doing when setting lifecycle rules. If you set the rules on the wrong bucket, you could lose older data you don't want to delete. Use at your own risk.
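One way to reduce the wrong-bucket risk is to check the bucket name and preview what an age-based rule would delete before enabling it. The sketch below is a hypothetical helper, not part of any Firebase tooling. It accepts any iterable of objects with `name`, `size` and timezone-aware `time_created` attributes, which is what `google.cloud.storage.Client().list_blobs(bucket_name)` yields, and it assumes the [region].artifacts.[projectId].appspot.com naming described above. The cutoff only approximates how Cloud Storage evaluates the Age condition.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Optional, Protocol

# Assumes the [region].artifacts.[projectId].appspot.com naming pattern.
ARTIFACTS_BUCKET = re.compile(r"^[a-z0-9-]+\.artifacts\.[a-z0-9-]+\.appspot\.com$")


class BlobLike(Protocol):
    name: str
    size: int
    time_created: datetime


def is_artifacts_bucket(bucket_name: str) -> bool:
    """Return True only for names that look like a Cloud Functions artifacts bucket."""
    return ARTIFACTS_BUCKET.match(bucket_name) is not None


def preview_deletions(
    bucket_name: str,
    blobs: Iterable[BlobLike],
    max_age_days: int = 14,
    now: Optional[datetime] = None,
) -> List[BlobLike]:
    """Dry run: list objects older than max_age_days. Nothing is deleted."""
    if not is_artifacts_bucket(bucket_name):
        raise ValueError(f"{bucket_name!r} does not look like an artifacts bucket")
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [b for b in blobs if b.time_created < cutoff]


def reclaimable_bytes(blobs: Iterable[BlobLike]) -> int:
    """Total size of the given objects, i.e. roughly what the rule would free."""
    return sum(b.size for b in blobs)
```

If the preview lists anything other than container image layers, stop and re-check which bucket you selected before adding the rule.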

There's a discussion in Google Groups where Firebase representatives say that old images are cleaned up, but either that doesn't seem to be true or the cleanup doesn't run often enough, at least not before it racks up a charge for you.

I'd like to hear if there's a reason to keep this content indefinitely, but so far I haven't found one. If Google let us revert to a previous version or run two versions of a function at the same time, that could be useful, but even then it would only make sense to keep the latest couple of versions, not the full deployment history. What do you think?

If you want to avoid creating these files in the first place, the Firebase team has recently released an updated Emulator Suite that lets you test your functions locally and deploy only when they're production-ready, which should minimize the number of Cloud Functions images created. You can read more about it here.

Hope this clears things up and saves you a couple of $$. Feel free to share your thoughts in the comments or drop us an email at hello@prototyp.digital. 😄