ChatGPT is down. Now what?

How to Set Up a Local LLM with Ollama, Docker and Open WebUI

I know, I know — why bother setting up your own local LLM when ChatGPT is right there? Well, maybe because it’s not nearly as complicated as you think. With a Mac or PC, it's a walk in the park. Stop putting it off.

Worried about getting stuck? I’ve got your back.

- Detailed Documentation: I provide links to comprehensive documentation and resources that will help you understand each step and troubleshoot any potential issues.

- Community Help: There are robust communities, forums, and GitHub repositories where you can seek help, share experiences, and learn from others who have already walked the path.

- Troubleshooting Tips: I’ve included a section specifically for common issues and troubleshooting tips, so you’re never left wondering where things went wrong.

Ready to Get Started? Let’s Do This.

Just follow this guide step by step, and you’ll have a powerful LLM running locally in no time. It’s time to take control of your AI needs without depending on external services. Let’s dive in, and don’t forget: you can thank me later.

1. Set up Ollama

Ollama is an open-source platform for running large language models locally.

Download Ollama:

Visit ollama.ai and download the app for your operating system (macOS, Linux, Windows [preview]).

Run the Local Model:

After installation, open your terminal and run:

ollama run llama3

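The first run downloads the Llama 3 model (a few gigabytes), then opens an interactive chat session in your terminal. Type /bye to leave it.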
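Behind the chat session, Ollama also runs a local server with an HTTP API, by default at http://localhost:11434; that is what Open WebUI will talk to in the next step. Below is a minimal sketch of sending a prompt to that API using only Python's standard library. It assumes the Ollama server is running and the llama3 model has been pulled; the helper names build_generate_request and generate are our own, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_generate_request(prompt: str, model: str = "llama3",
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # one JSON reply instead of a stream of partial chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3", timeout: float = 300.0) -> str:
    """Send one prompt to the local model and return its full answer."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return reply["response"]  # the generated text
```

With Ollama running, print(generate("Why is the sky blue?")) should print the model's answer. Turning streaming off trades the word-by-word output you see in the terminal for a single JSON reply, which is simpler to handle in a script.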

You can already interact with the LLM via terminal, but you want that sleek chat UI, right? On to the next step...

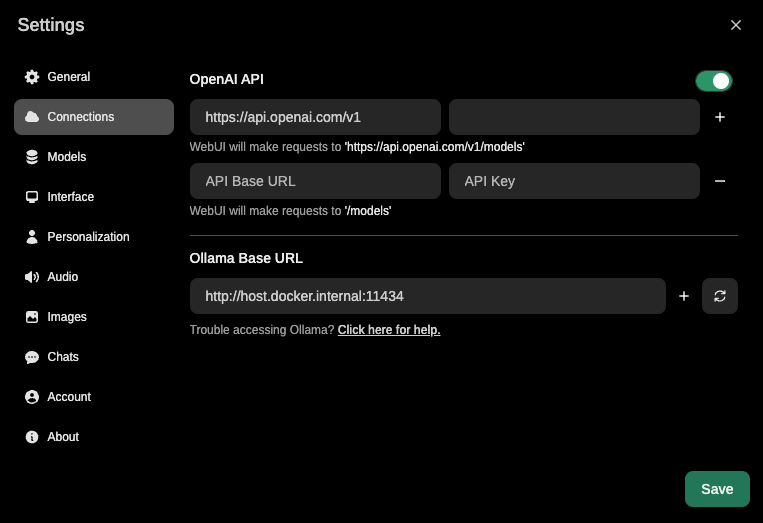

2. Set up Your Chat Interface via Open WebUI

Open WebUI is a web-based interface for interacting with Ollama's models.

This part requires Docker Desktop, so go ahead and install it before continuing.

Run Open WebUI via Docker:

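Open your terminal and enter the command below. It publishes the interface on port 3000 of your machine (the container itself listens on 8080), lets the container reach services on your computer, such as Ollama, through the name host.docker.internal, and keeps your data in a Docker volume named open-webui: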

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Accessing Open WebUI:

Open your browser and go to http://localhost:3000/.
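Open WebUI's model picker shows the models Ollama has downloaded. If it comes up empty, it helps to check what Ollama itself reports. A minimal sketch, assuming Ollama is on its default port: it queries Ollama's /api/tags endpoint, and the helper names model_names and list_local_models are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def model_names(tags: dict) -> list:
    """Pull the model names out of an /api/tags reply."""
    return [model["name"] for model in tags.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list:
    """Ask Ollama which models it has downloaded, e.g. ['llama3:latest']."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        return model_names(json.load(response))
```

If list_local_models() returns names such as llama3:latest but Open WebUI still shows none, the likelier culprit is the container's connection to your computer rather than Ollama itself.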

Updating WebUI:

To keep your WebUI up to date, use:

docker run --rm --volume /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower --run-once open-webui

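Tip: You can add your OpenAI API key in Open WebUI to chat with OpenAI’s models through the API from the same interface. When the chat.openai.com website is down, the API may still be reachable, and your local models keep working either way. That's the beauty of it.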

Documentation for Your Convenience

- Ollama Docs: Ollama GitHub Repository

- Open WebUI Docs: Open WebUI Documentation

Alternative to Open WebUI

Enchanted (Native macOS App)

- Available via the App Store

- Note: This alternative does not support API access to ChatGPT. I don't use it, but it is handy if you don’t want to install Docker and WebUI. You win some, you lose some.

Practical Benefits of Running Local LLMs

Running large language models (LLMs) locally offers real advantages, especially for designers and developers who need private, reliable, and customizable AI tools. Here are the key benefits:

- Data Privacy and Security: Your prompts and data stay on your machine instead of being sent to a third-party server.

- Faster Response Times: No network round trip or queueing behind other users; on capable hardware, smaller models can respond very quickly.

- Reduced Dependency on External APIs: Your setup keeps working when an outside service is down, or even when you are offline.

- Customization and Control: You choose which model runs, which version, and how it is configured.

- Cost Efficiency: No per-token API fees or subscriptions; once you own the hardware, running models costs little beyond electricity.

Embracing local LLMs empowers you to build more secure, responsive, and custom AI applications, ultimately enhancing productivity and innovation in your projects.

System Requirements and Prerequisites

For Ollama:

Minimum System Requirements:

- Operating System: macOS, Linux, or Windows (Preview)

- Processor: A modern 64-bit CPU (Intel i5/i7/i9, AMD Ryzen, Apple M1/M2)

- Memory: At least 8 GB of RAM for 7B/8B models such as Llama 3 (16 GB or more for 13B models and better performance)

- Storage: At least 10 GB of free disk space (SSD recommended for faster performance)
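To check the memory and storage figures above on your own machine, here is a rough sketch using only Python's standard library. It assumes models are stored on the same drive as your home directory (Ollama's default on macOS is ~/.ollama/models). RAM detection relies on os.sysconf, which Windows lacks, so it reports None there. The helper names and the 10% slack on RAM are our own assumptions.

```python
import os
import shutil

GIB = 1024 ** 3  # bytes per gibibyte


def free_disk_gib(path=None) -> float:
    """Free space, in GiB, on the drive holding `path` (default: your home directory)."""
    return shutil.disk_usage(path or os.path.expanduser("~")).free / GIB


def total_ram_gib():
    """Total physical RAM in GiB, or None if os.sysconf can't report it."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / GIB
    except (AttributeError, ValueError, OSError):
        # AttributeError: no os.sysconf (Windows); ValueError: name unsupported here.
        return None


def requirements_report(path=None, min_ram_gib: float = 8, min_disk_gib: float = 10) -> dict:
    """Compare this machine against the minimums listed above."""
    ram = total_ram_gib()
    disk = free_disk_gib(path)
    return {
        "ram_gib": ram,
        # Allow ~10% slack (our assumption): the OS reserves some memory,
        # so an "8 GB" machine may report slightly less.
        "ram_ok": None if ram is None else ram >= min_ram_gib * 0.9,
        "disk_gib": disk,
        "disk_ok": disk >= min_disk_gib,
    }
```

Calling print(requirements_report()) gives a quick yes/no per item; a None for RAM just means the check couldn't run on that system.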

Prerequisites:

- Python: Not needed to run Ollama itself, which installs as a standalone app. Install Python 3 only if you want to script against Ollama’s local API.

- Terminal/Command Line Interface: Basic familiarity with using the terminal or command line interface on your operating system.

For Docker and Open WebUI:

Minimum System Requirements:

- Operating System: macOS, Linux, or Windows 10/11 with WSL 2 (Windows Subsystem for Linux) for proper Docker Desktop integration

- Processor: A modern 64-bit CPU (Intel i5/i7/i9, AMD Ryzen, Apple M1/M2)

- Memory: At least 8 GB of RAM (16 GB or more recommended for better performance)

- Storage: At least 10 GB of free disk space

Prerequisites:

- Docker Desktop: Download and install Docker Desktop from Docker for your operating system. Follow the installation instructions and make sure it is properly configured and running.

- Terminal/Command Line Interface: Basic familiarity with using the terminal or command line interface on your operating system.

Special Considerations for Windows Users:

- WSL 2: Docker Desktop for Windows requires the Windows Subsystem for Linux (WSL 2). Make sure WSL 2 is enabled and properly configured.

- Follow the instructions here to set up WSL 2: Install WSL.

Common Issues and Troubleshooting Tips

Even though setting up Ollama and Docker is straightforward, you might run into some hiccups along the way. Here are some common issues and troubleshooting tips to help you out:

1. Docker Installation and Configuration Issues

Issue: Docker Desktop doesn’t start or shows errors.

Troubleshooting Tips:

- For Windows Users: Ensure that WSL 2 is installed and configured. Follow the official guide to set up WSL 2: Install WSL.

- Check System Requirements: Make sure your system meets the requirements for Docker Desktop.

- Restart Docker and Your Computer: Sometimes, a simple restart can resolve installation issues.

- Administrative Privileges: Ensure that Docker Desktop is running with administrative privileges.

2. Docker Container Issues

Issue: Docker container for Open WebUI fails to start or crashes.

Troubleshooting Tips:

- Check Docker Logs: View container logs for errors using:

docker logs open-webui

- Sufficient Resources: Make sure your system has enough CPU and RAM allocated to Docker.

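- Port Conflicts: Ensure that port 3000 isn’t being used by another application. If it is, map Open WebUI to a different host port. Because a container named open-webui already exists from your first attempt, remove it first (your chats and settings live in the open-webui volume, so they are kept):

docker rm -f open-webui

Then start it again with the new port mapping, and open http://localhost:3001/ instead:

docker run -d -p 3001:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main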
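If you are not sure whether something already occupies port 3000, you can check before running docker run. A minimal sketch with hypothetical helper names: it treats a port as taken if something on this machine accepts a TCP connection on it over IPv4 localhost, so it may miss programs listening only on another interface or on IPv6.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    """True if something on this machine accepts TCP connections on `port`."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception.
        return sock.connect_ex((host, port)) == 0


def first_free_port(start: int = 3000, end: int = 3010):
    """First port in [start, end] with nothing listening, or None if all are taken."""
    for port in range(start, end + 1):
        if not port_in_use(port):
            return port
    return None
```

For example, first_free_port(3000, 3010) returns the first port in that range with nothing listening, which you can use on the left side of -p (as in -p 3001:8080).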

3. Ollama Installation Issues

Issue: Ollama command not found, or errors when running the model.

Troubleshooting Tips:

- Path Configuration: Ensure that Ollama is properly installed and that the installation path is included in your system’s PATH variable.

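- Verify Installation: Run ollama --version to confirm the command works; if it doesn’t, reinstall Ollama to make sure all components are correctly installed.

- Check That Ollama Is Running: Ollama itself doesn’t need Python; the ollama command talks to a local background server. Make sure the Ollama app is open (on Linux, that the ollama service is running), or start the server manually with ollama serve.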
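These checks can also be scripted. A minimal sketch, assuming Python 3.7 or later: it looks for the ollama command on your PATH, asks it for its version, and checks whether Ollama's local server at http://localhost:11434 (its default address) answers. The helper names are hypothetical.

```python
import shutil
import subprocess
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def find_cli(name: str = "ollama"):
    """Full path of `name` if it is on PATH, else None."""
    return shutil.which(name)


def cli_version(name: str = "ollama"):
    """Output of `<name> --version`, or None if the command isn't on PATH."""
    path = find_cli(name)
    if path is None:
        return None
    result = subprocess.run([path, "--version"], capture_output=True, text=True, timeout=10)
    # Fall back to stderr in case the message is printed there.
    return (result.stdout or result.stderr).strip()


def server_is_running(base_url: str = OLLAMA_URL, timeout: float = 3.0) -> bool:
    """True if the Ollama server answers an HTTP request at `base_url`."""
    # Skip any configured HTTP proxy: the server lives on this machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(base_url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Connection refused, timeouts, and HTTP errors all land here
        # (urllib.error.URLError and HTTPError subclass OSError).
        return False
```

Work through them in order: if find_cli() returns None, fix your PATH or reinstall; if the command is found but server_is_running() is False, start the Ollama app (or ollama serve) before trying ollama run again.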

4. Performance Issues

Issue: Slow performance or lag when interacting with the LLM.

Troubleshooting Tips:

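- Check Docker’s Resource Settings: In this setup the model runs in Ollama directly on your machine, not inside Docker; the container only serves the web interface. Giving Docker more memory won’t speed up responses and can leave less RAM for the model, so review the allocation in Docker Desktop settings.

- Reduce Load: Close unnecessary applications to free up system resources.

- Check Hardware: Ensure your computer meets the recommended hardware requirements (e.g., SSD, 16 GB RAM).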
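To tell whether a change actually helped, it is useful to measure generation speed. Ollama's non-streaming /api/generate reply includes eval_count (tokens generated) and eval_duration (time spent generating them, in nanoseconds), plus load_duration for loading the model. A minimal sketch, assuming Ollama on its default port and the llama3 model; the helper names tokens_per_second and benchmark are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def tokens_per_second(reply: dict) -> float:
    """Generation speed from a non-streaming /api/generate reply."""
    seconds = reply["eval_duration"] / 1e9  # Ollama reports durations in nanoseconds
    return reply["eval_count"] / seconds if seconds > 0 else 0.0


def benchmark(prompt: str, model: str = "llama3", timeout: float = 300.0) -> dict:
    """Send one prompt and return load time (seconds) and generation speed."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False})
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return {
        # Time spent loading the model into memory; large on a cold start.
        "load_seconds": reply.get("load_duration", 0) / 1e9,
        "tokens_per_second": tokens_per_second(reply),
    }
```

Because load time is reported separately, a slow first answer with a large load_seconds usually points to model loading rather than slow generation; compare tokens_per_second before and after each change.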

5. Network Issues

Issue: Unable to access Open WebUI via localhost.

Troubleshooting Tips:

- Correct Port: Confirm that you are using the host port from your docker run command (http://localhost:3000/ by default).

- Firewall Settings: Check whether your firewall settings are blocking the connection.

- Restart Docker Container: Sometimes restarting the container resolves connectivity issues. Use:

docker restart open-webui
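If the page still won't load, you can test from a script whether anything answers at that address. A minimal sketch with hypothetical helper names: it treats any HTTP reply, even an error status, as reachable, and polls for a while on the assumption that the container may need some time to start.

```python
import time
import urllib.error
import urllib.request

# Skip any configured HTTP proxy: the target runs on this machine.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def responds(url: str, timeout: float = 3.0) -> bool:
    """True if something at `url` returns any HTTP response at all."""
    try:
        with _OPENER.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # An error status (404, 500, ...) still means a server answered.
        return True
    except OSError:
        # Connection refused, timeout, or name resolution failure.
        return False


def wait_until_up(url: str = "http://localhost:3000/", attempts: int = 30,
                  delay: float = 2.0) -> bool:
    """Poll `url` up to `attempts` times, `delay` seconds apart."""
    for attempt in range(attempts):
        if responds(url):
            return True
        if attempt < attempts - 1:  # no point sleeping after the last try
            time.sleep(delay)
    return False
```

If wait_until_up() returns False while docker ps shows the container running, the port mapping and docker logs open-webui are the next things to check.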

6. Errors Related to Open WebUI

Issue: Errors or missing features in Open WebUI.

Troubleshooting Tips:

- Update Open WebUI: Make sure you’re using the latest version of Open WebUI. Update using:

docker run --rm --volume /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower --run-once open-webui

- Check Documentation: Refer to the official documentation for any version-specific steps or updates that need to be performed: Open WebUI Documentation.

7. General Tips

Tip: Always keep your software up to date. Regularly check for updates for both Ollama and Docker Desktop to ensure you have the latest features and bug fixes.

Pro Tip: Join community forums or GitHub issues pages for both Ollama and Open WebUI to seek help and share experiences with other users.

Follow this guide, and you’ll be well on your way to a more autonomous and efficient AI experience.